Model Collapse (2024)

Zilkha South Gallery, Wesleyan University

Model Collapse is my Senior Studio Art Thesis Exhibition. Its title is inspired by a paper studying the effects of training artificial intelligence on synthetic, or artificially generated, data: “Without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. We term this condition Model Autophagy Disorder (MAD), making analogy to mad cow disease.” (https://arxiv.org/abs/2307.01850). Having borne physical witness to the autophagic process of uveitis in my own body, I use autophagy as an entry point into complex contemporary relationships between information, data, and the personal. Model Collapse is an exploration of alternative indexical photographic processes, and of the relationships between information, physicality, and domesticity in their presentation.

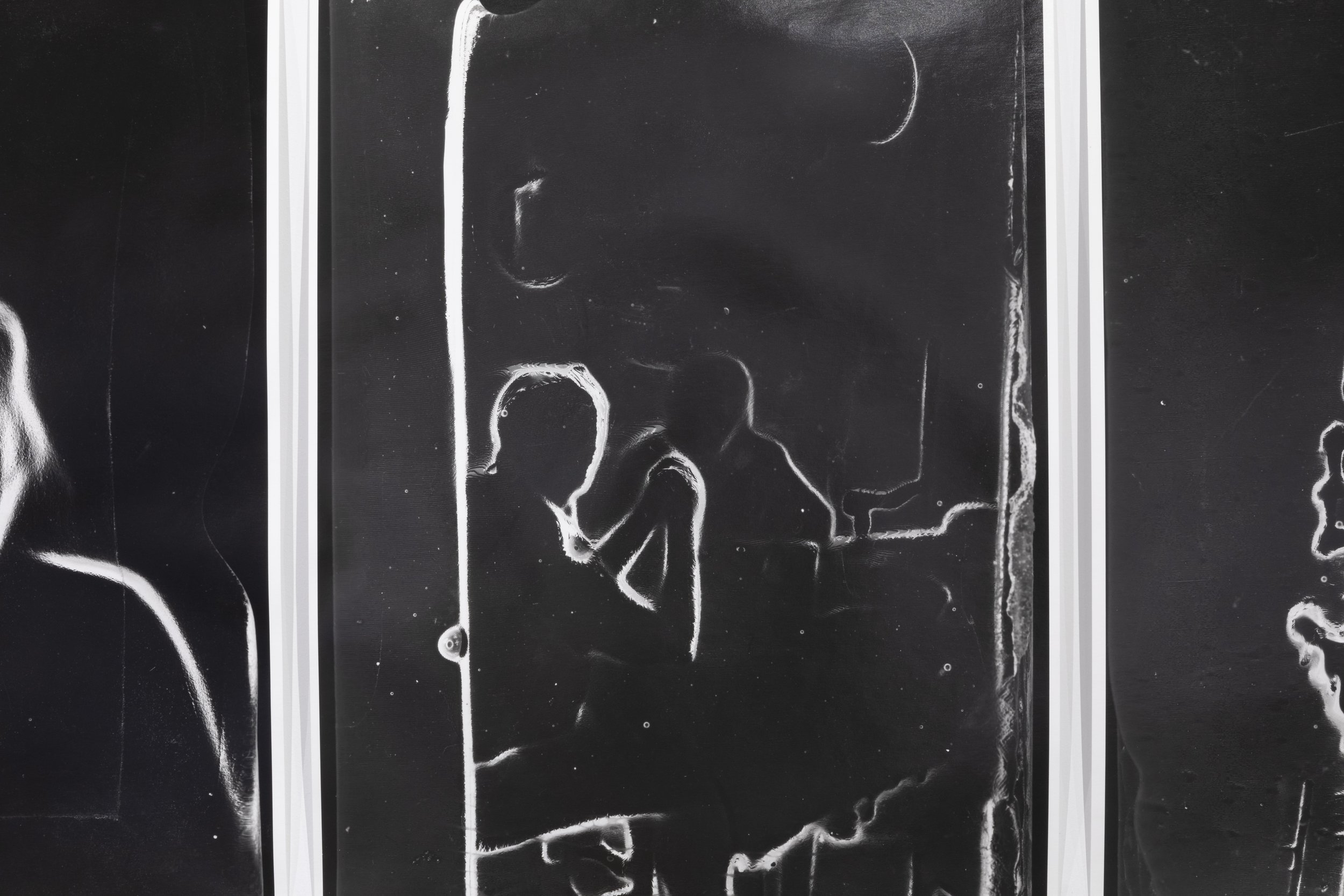

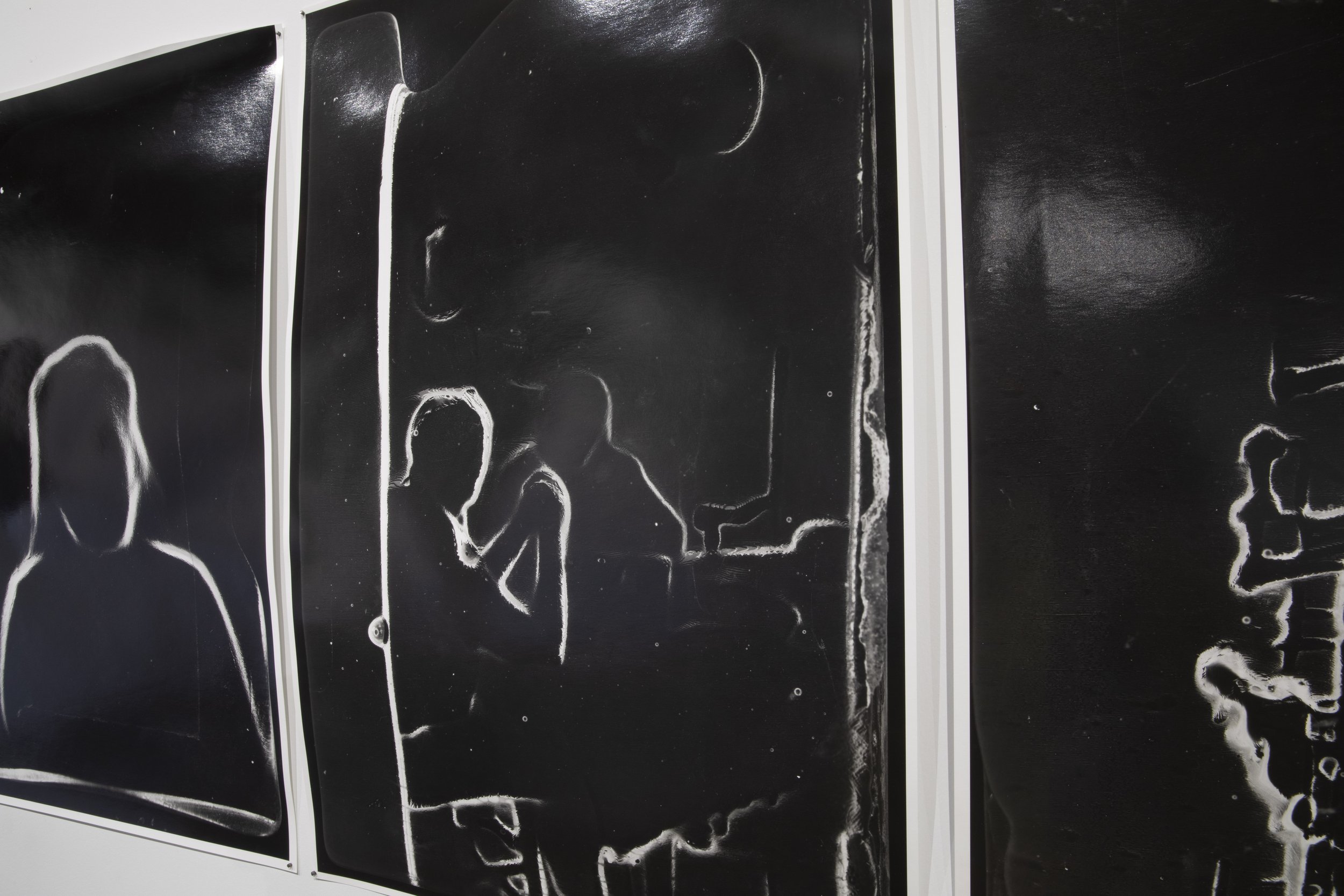

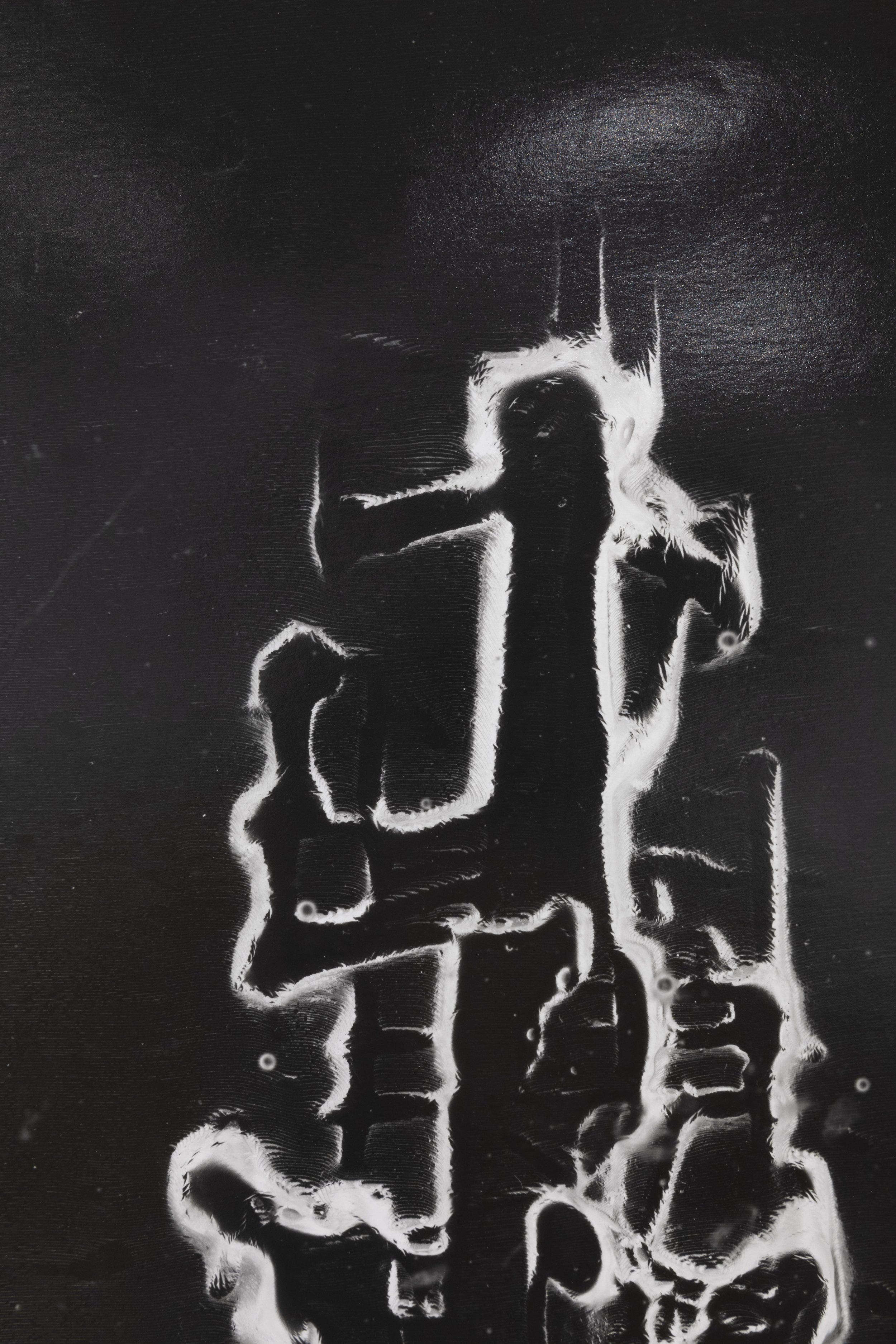

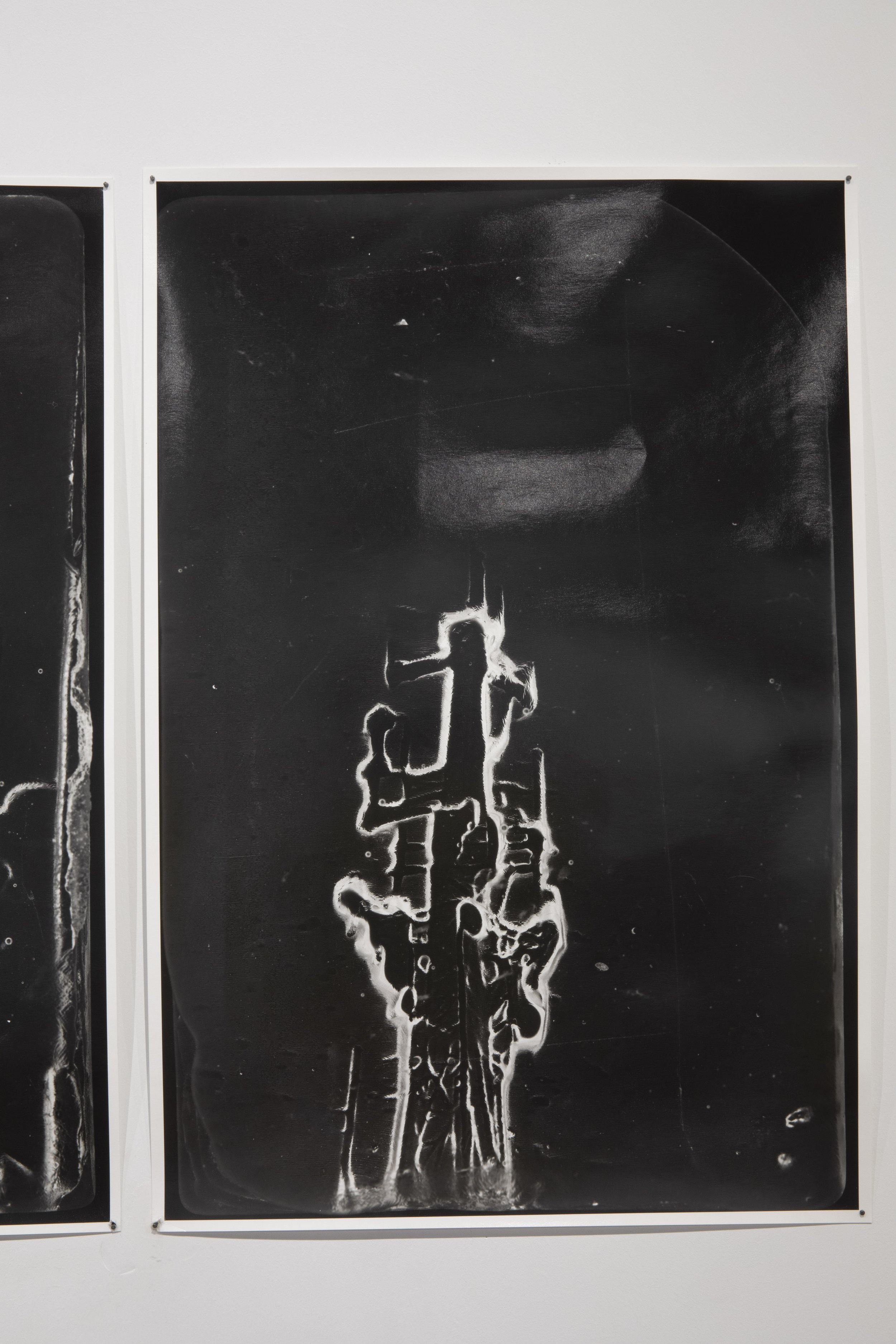

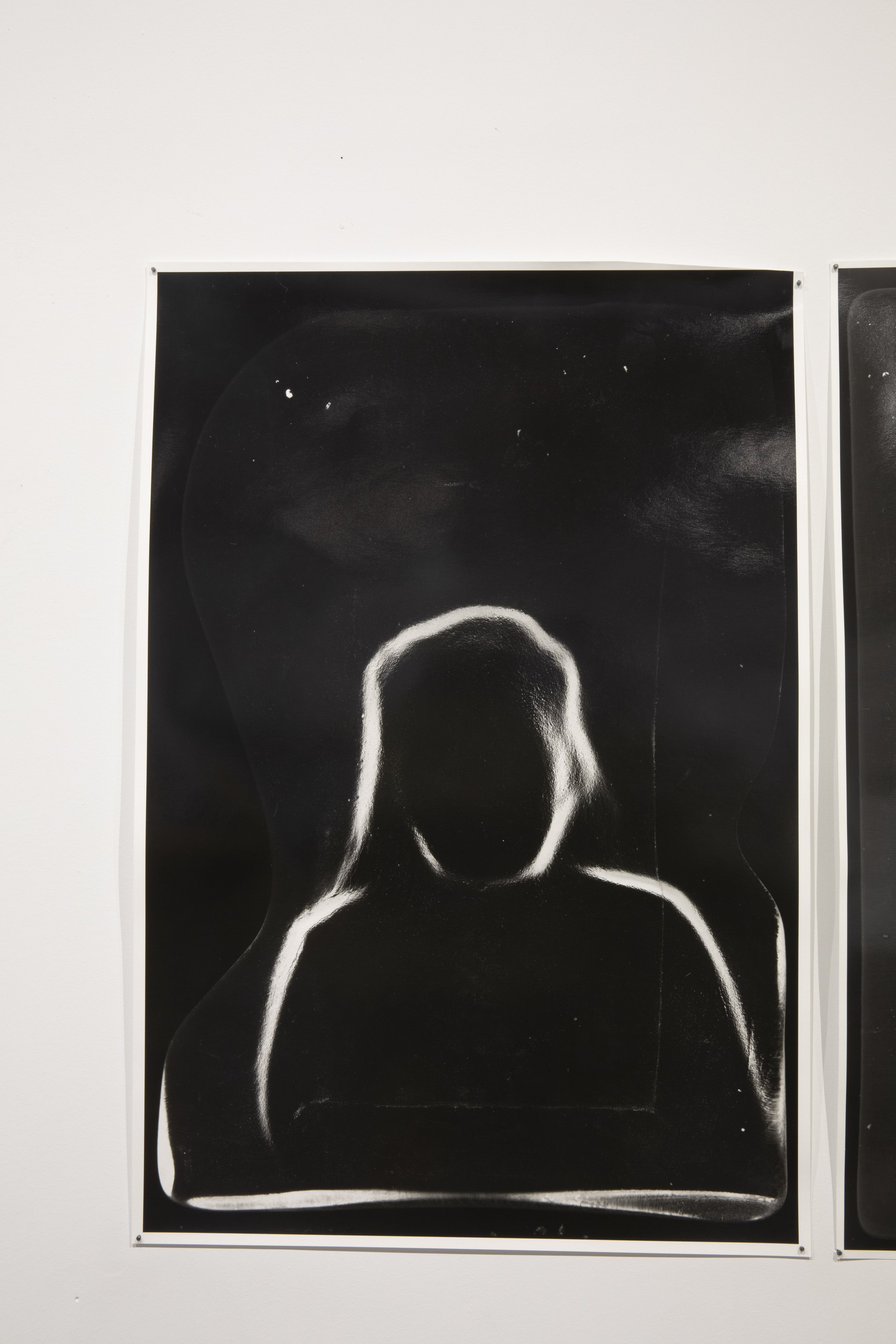

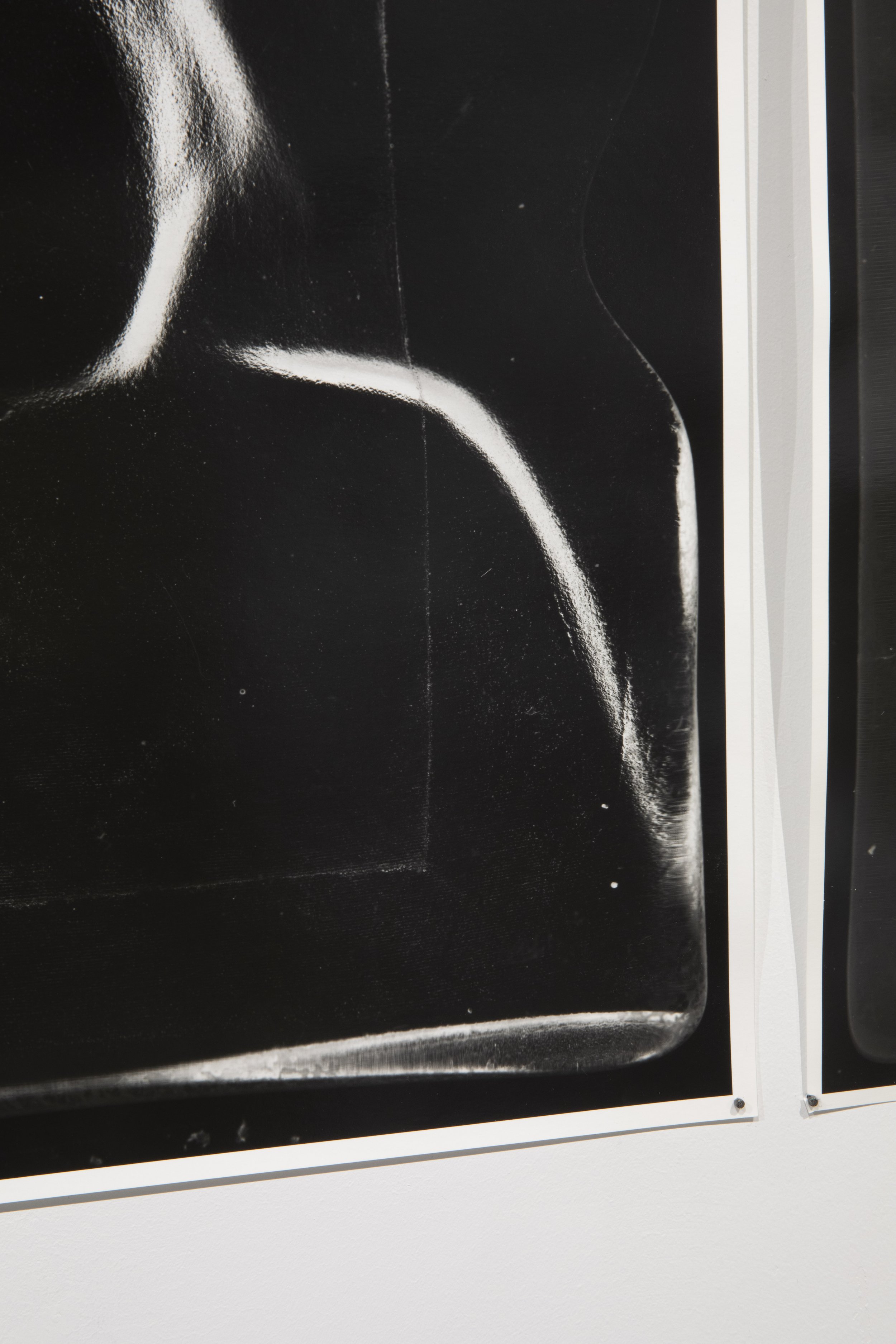

The first alternative process developed for this project produced a series titled ZoeDepth2Glass, named after the ZoeDepth algorithm it uses (arXiv:2302.12288). Each piece begins as a photograph, which I digitize and store. Running the image through ZoeDepth generates a depth map, which is then de-virtualized and cast into glass. These glass objects are also used to make enlargements, resulting in works like Autophagy (2024), a triptych of analog prints enlarged from the glass casts.

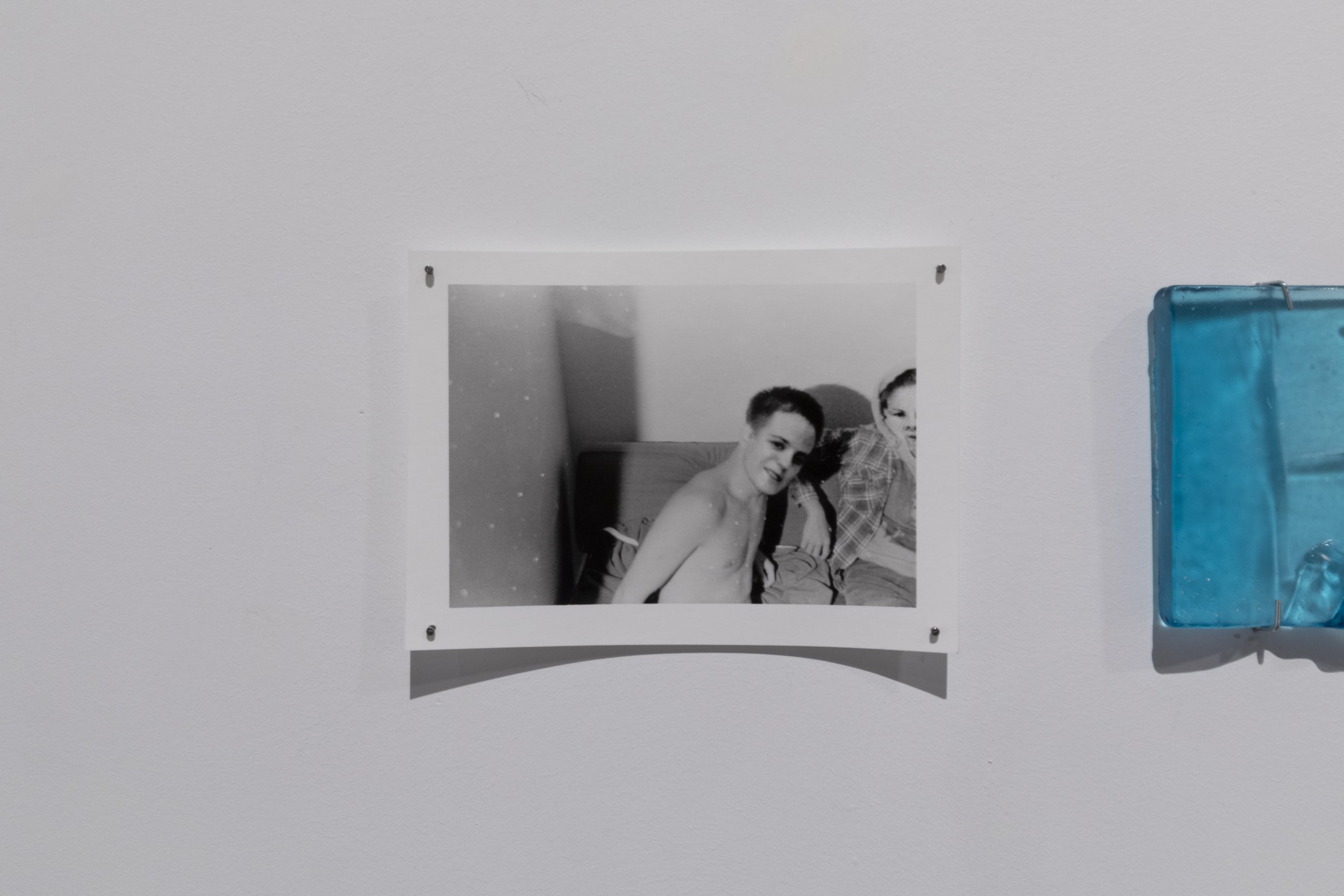

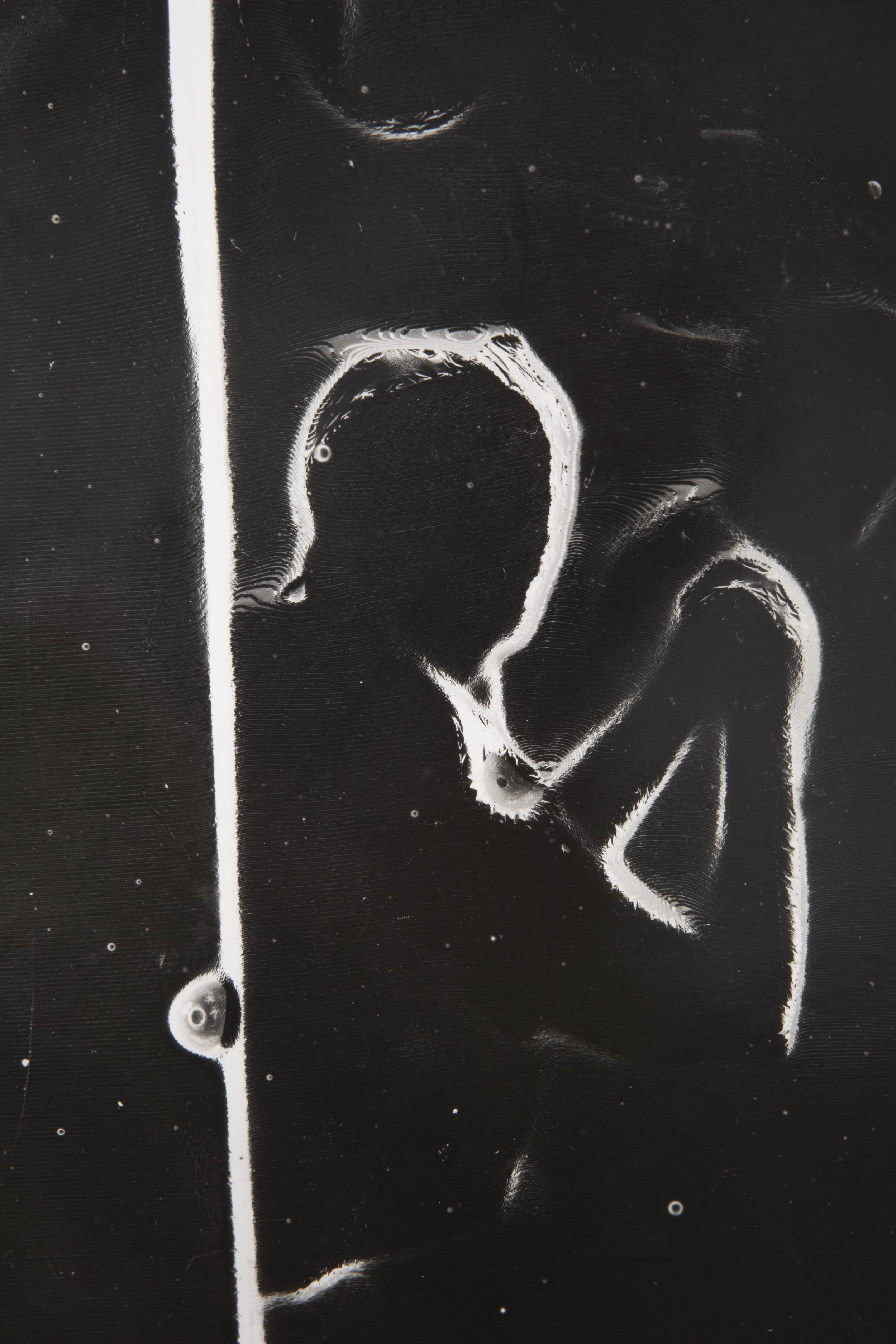

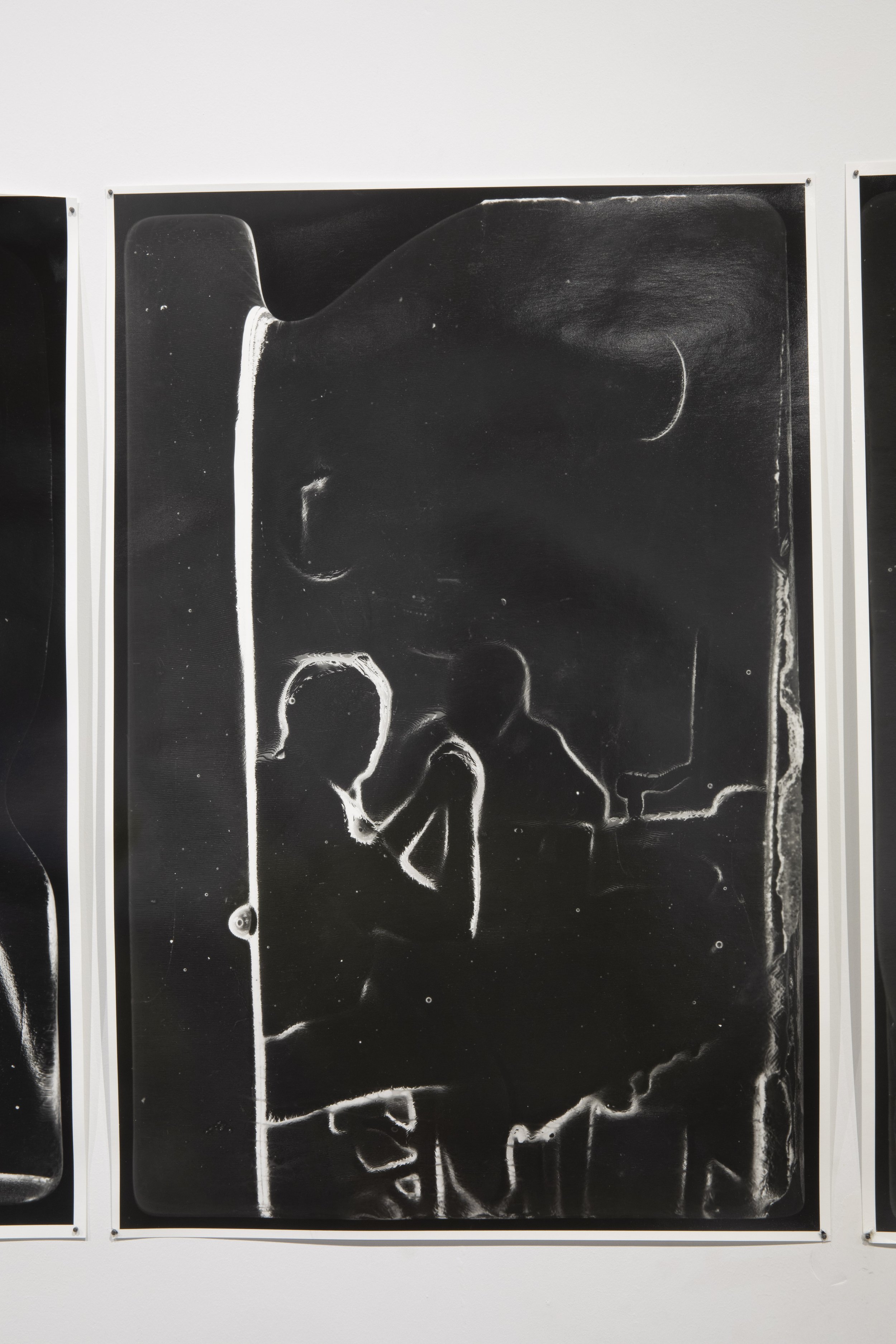

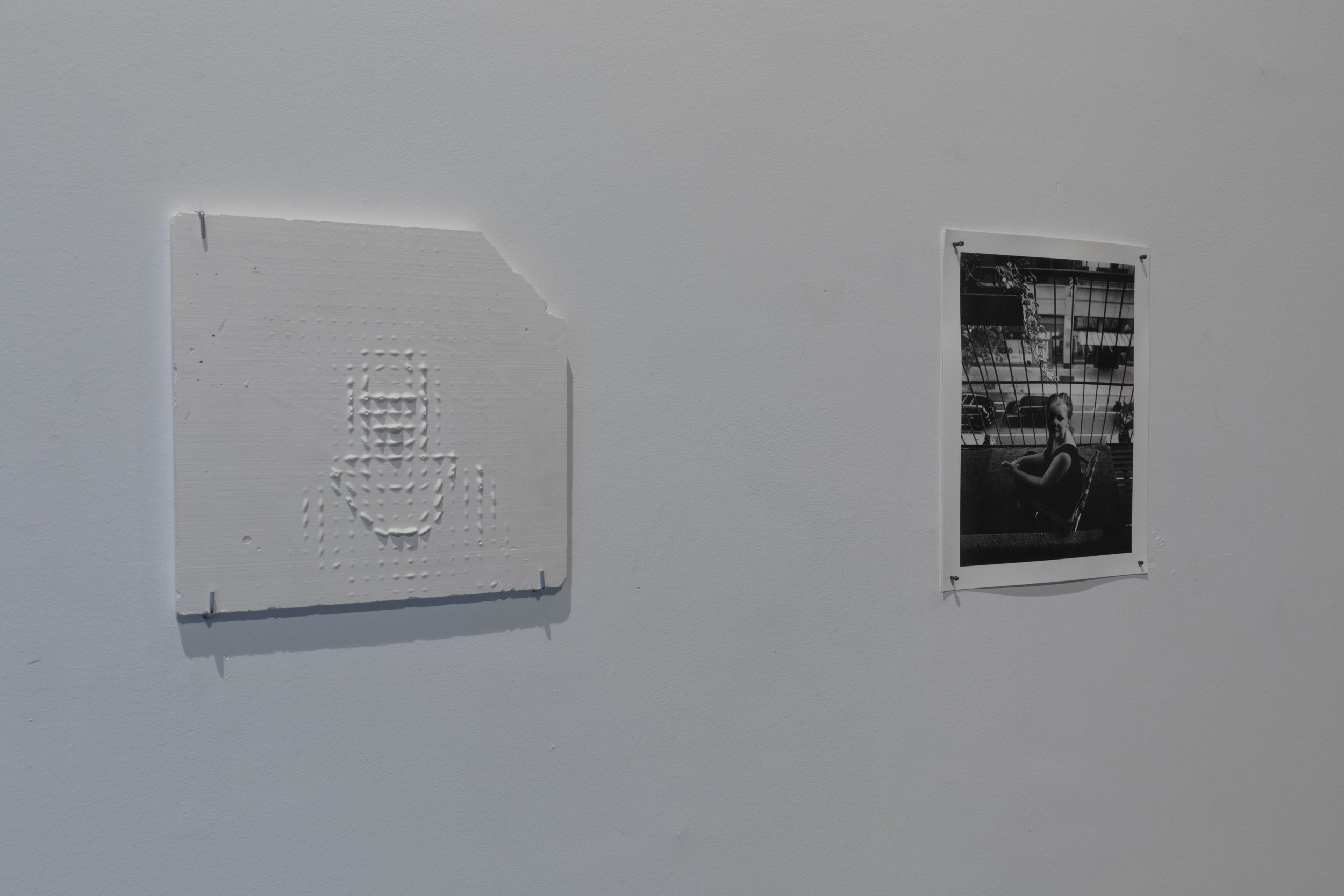

The second process produced works titled DeepPrivacy2Film. These are likewise named after their algorithm, DeepPrivacy (arXiv:1909.04538), which detects faces in images, erases them, and generates new faces to anonymize the subjects. Recently adopted by celebrity Instagram publicity teams, among other new applications, the algorithm attempts to erase information and replace it with new, generic forms. I first photograph friends and family on film; the images are then scanned, anonymized, transferred back to 35mm black-and-white film, and printed by hand in the darkroom.

The third, and least represented, process is the creation of HOG reliefs. Histogram of Oriented Gradients (HOG) is a feature descriptor that reduces photographic data to the direction and intensity of local changes in brightness. This data is conventionally visualized as a black-and-white image whose marks encode those directional and intensity values. Here I render that information in physical space, so that the relief itself casts shadows.
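For readers curious where those direction and intensity values come from, here is a minimal Python/NumPy sketch of the core of HOG. It is a simplification, not the pipeline used to make the reliefs: it assumes 8-by-8-pixel cells and 9 unsigned orientation bins, and it omits the block normalization step of the full method. The function name `hog_cells` is hypothetical.

```python
import numpy as np

def hog_cells(image: np.ndarray, cell: int = 8, bins: int = 9) -> np.ndarray:
    """Hypothetical, simplified HOG: for each cell, a histogram of gradient
    orientations weighted by gradient magnitude. Assumes a 2-D grayscale
    array; block normalization from the full method is omitted."""
    img = image.astype(float)
    # Brightness change along rows (y) and columns (x), via central differences.
    gy, gx = np.gradient(img)
    # "Intensity": how strong the edge is at each pixel.
    magnitude = np.hypot(gx, gy)
    # "Direction": unsigned orientation in degrees, folded into [0, 180).
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0

    n_rows, n_cols = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_rows, n_cols, bins))
    bin_width = 180.0 / bins
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(r * cell, (r + 1) * cell)
            cols = slice(c * cell, (c + 1) * cell)
            # Assign each pixel to an orientation bin, then sum magnitudes per bin.
            idx = (angle[rows, cols] // bin_width).astype(int) % bins
            hist[r, c] = np.bincount(
                idx.ravel(), weights=magnitude[rows, cols].ravel(), minlength=bins
            )
    return hist

# Usage: a 16x16 image with a single vertical edge down the middle.
img = np.zeros((16, 16))
img[:, 8:] = 255
h = hog_cells(img)
print(h.shape)
```

On this synthetic image all of the weight lands in the first (0°) bin, since the brightness changes horizontally, perpendicular to the vertical edge. Each cell's histogram is the kind of direction-and-intensity record that a HOG visualization draws as marks, and that a relief can turn into height.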

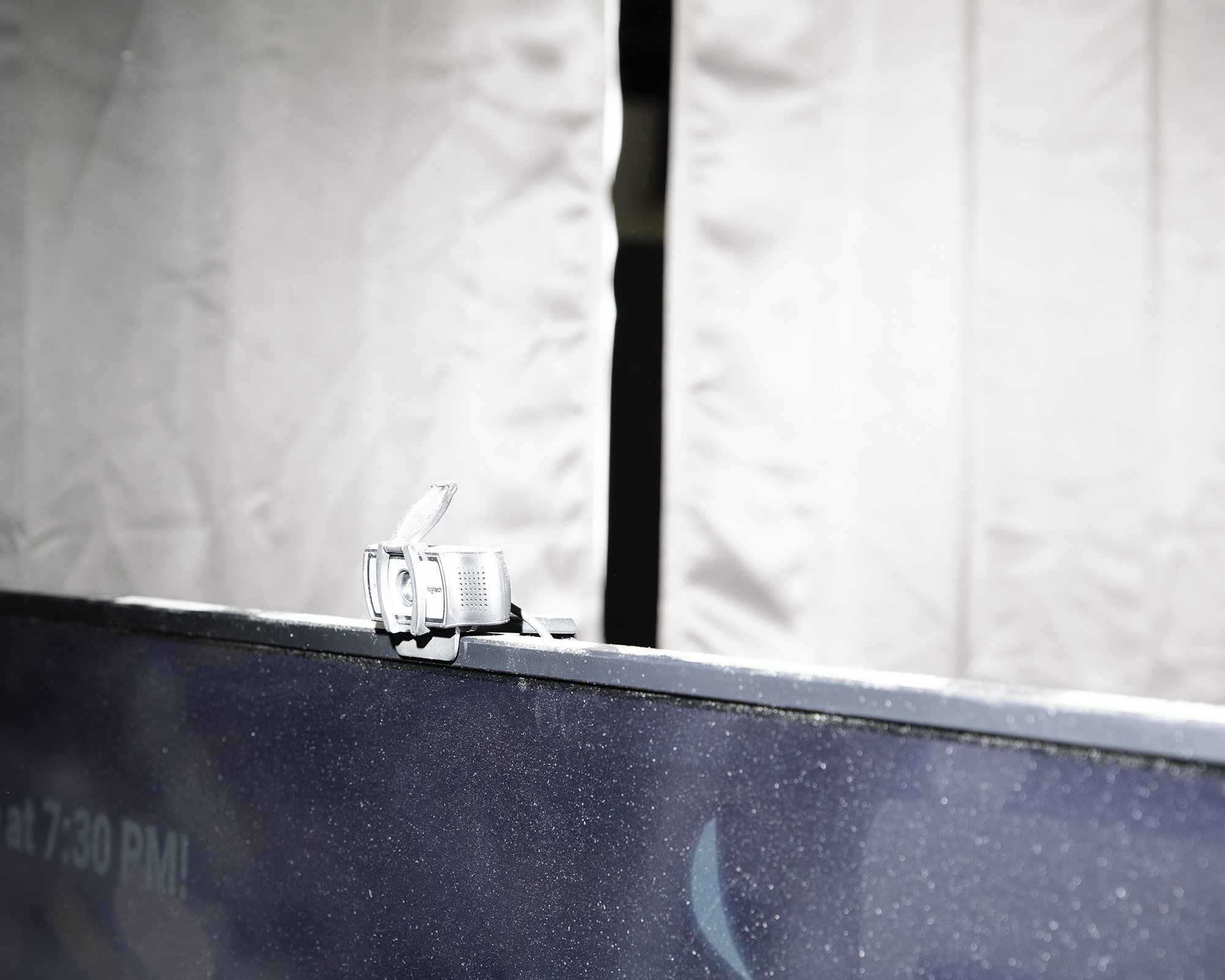

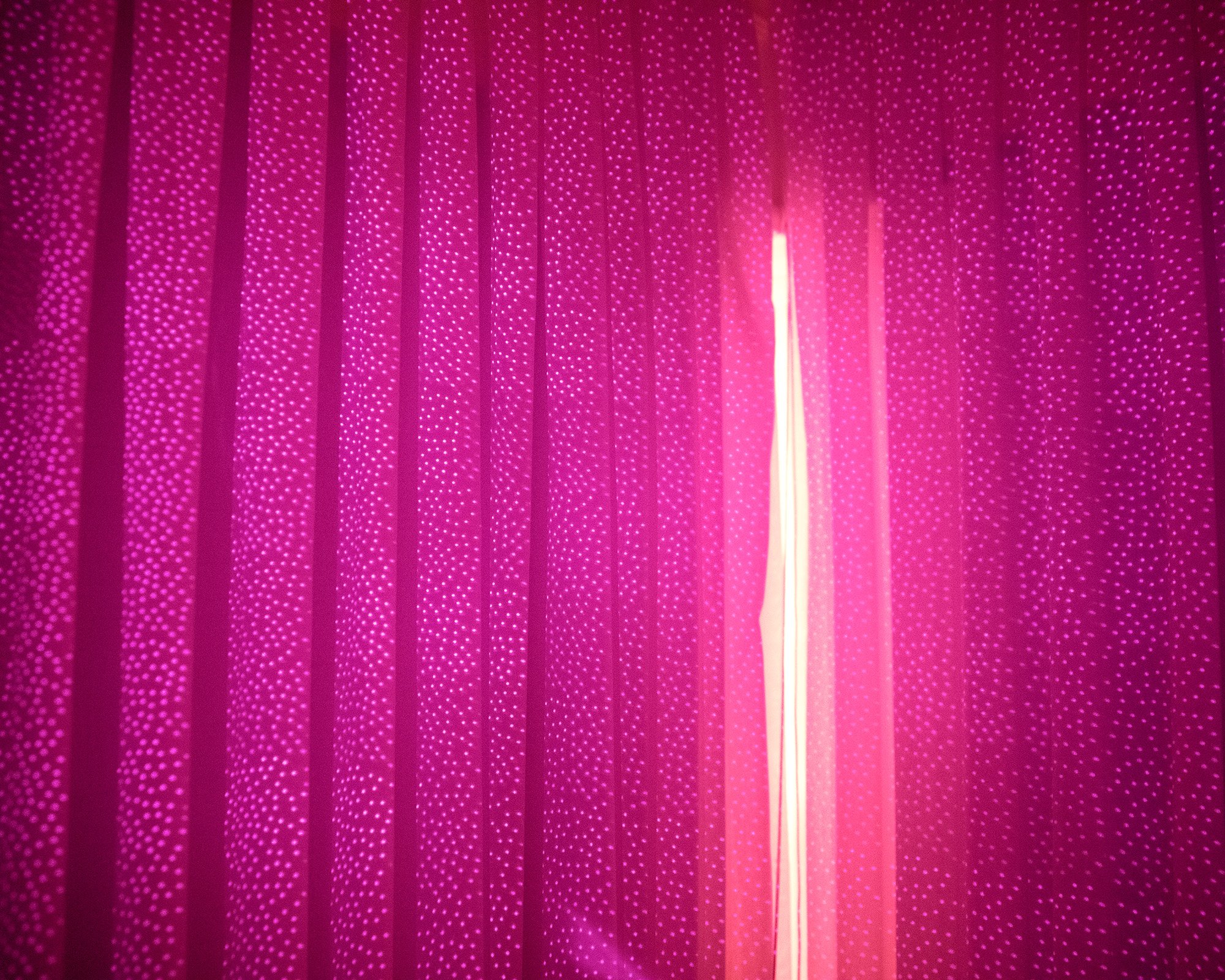

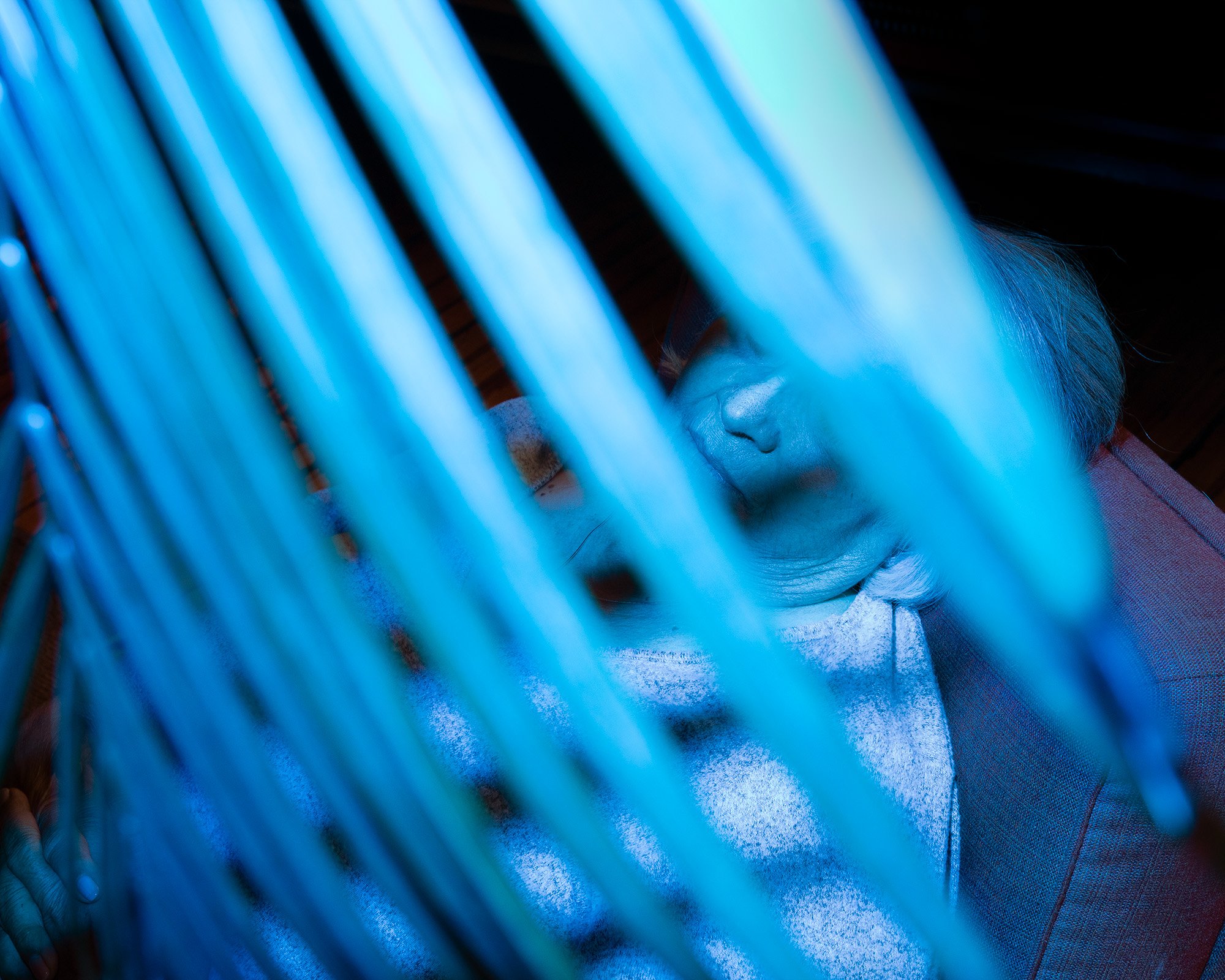

The final process is less constrained: it is my ongoing effort to photograph, find, and create traditional digital images that support and complicate the results of the first three processes. In this work it produced two sets of digital images, one traditional and one more experimental, made with a modified digital camera that captures the infrared spectrum. The infrared images are made using the dot-map projection of Apple's Face ID: with one hand I photographed, and with the other I held my phone as a projector of invisible light.

Individual works can be found below the installation pictures.

Installation

Included Works

All inquiries: emileafpatel@gmail.com